The meaning of 'bio-inspired' is as follows:

- Looking at the algorithms and functions in the natural world and thinking there must be something we can use computationally here

According to Wikipedia, bio-inspired computing (short for biologically inspired computing) is a field of study which seeks to solve computer science problems models of biology.

- It relates to connectionism, social behaviour, and emergence.

Within computer science, bio-inspired computing relates to artificial intelligence (AI) and machine learning (ML).

Bio-inspired computation has emerged as one of the most studied branches of AI during the last decades, according to the referece:

- Del Ser, Javier, et al. "Bio-inspired computation: Where we stand and what's next." Swarm and Evolutionary Computation 48 (2019): 220-250.

Brief overview of bio-inspired computing:

- 1950s: Cybernetics

- "the science of control and communication, in the animal and the machine" - Weiner, 1948

- "Co-ordination, regulation and control will be its themes, for these are of the greatest biological and practical interest" - Ashby, 1961

- 1960s: Connectionism (neural networks)

- "The perceptron is a minimally constrained 'nerve net' consisting of logically simplified neural elements, which has been shown to be capable of learning to discriminate and to recognise perceptual patterns" - F. Rosenblatt, 'Perceptron Simulation Experiments,' in Proceedings of the IRE, vol. 48, no. 3, pp. 301-309, March 1960, doi: 10.1109/JRPROC.1960.287598

- 1970s: Genetic algorithms

- The algorithms were introduced in the US by John Holland at University of Michigan

- 1980s: Artificial life

- 1990s: Onwards and upwards!

Genetic algorithms (GAs) are a type of search algorithm inspired by natural selection. They mimic the process of evolution to find optimal solutions to problems:

- GAs are probabilistic search procedures designed to work on large spaces involving states that can be represented by strings. A space is defined as the space of possible solutions to a problem.

- Probabilistic search: selection and breeding

- States: phenotype

- Strings: genotype

The complete set of genetic material, including all chromosomes, is called a genome.

- The genotype encodes the range of characteristics of the individual DNA (the set of genes in genome).

- DNA is passed from one generation to the next: heredity

- The phenotype is the actual individual that manifests in the world.

Genotype is set at birth, determined by the DNA inherited from the parents. It's fixed at conception. Phenotype is influenced by both genotype and environment. While genotype provides the blueprint, the environment plays a role in shaping the phenotype. For example, even with a genotype for tallness, nutrition can affect how tall someone becomes.

- Nature vs. Nurture:

- DNA = Nature

- Environment = Nurture, defining where in these ranges the characteristics actually fall

The environment plays a crucial role in shaping evolution. It exerts selective pressure on phenotypes, favouring those that are better suited to survive and reproduce.

- These successful phenotypes, with their underlying genotypes, are more likely to be passed on to future generations (heredity).

GAs are inspired by the principles of Darwinian evolution. In GAs, we simulate a population of individuals with varying traits (analogous to phenotypes):

- Popluation and Variation

: We start with a population of candidate solutions, each representing a potential answer to the problem. Each solution has its own unique set of characteristics (like genes in an organism). - Selection

: We then select solutions that perform well based on a defined fitness function (similar to how successful phenotypes survive in nature). - Reproduction

: These 'fit' solutions are then used to create new solutions (like breeding in evolution). Techniques like crossover (combining characteristics) and mutation (introducing variations) are used to mimic the processes of inheritance and random genetic changes.

Advantages of GAs:

- Finding optimal solutions

: A key advantage of GAs is their ability to locate both local and global maxima (points of highest fitness) within a given search space. This makes them superior to older 'hill-climbing' algorithms that can get stuck at local maxima. - Exploring combinations

: GAs go beyond simply testing individual components. They employ a technique called hyperplane sampling. This essentially means they evaluate various different combinations of these components, mimicking how different genes interact in an organism. This allows GAs to explore a broader range of potential solutions and potentially discover more optimal combinations.

How GAs work:

- Selection

: Imagine a roulett wheel where each slice represents a member of the population. The size of a slice is determined by a 'fitness function' that evaluates how well that member solves the problem.- The fitter a member, the larger its slice, giving it a higher chance of being selected for reproduction. This mimics how natural selection favours organisms better suited to their environment.

- Hyperplane sampling and schemata

: GAs don't just evaluate individual components of a solution, like bricks in a wall. They can also test different combinations of these components (like building different wall structures).- This allows them to find better overall solutions by exploring how different components work together. The schema theorem is a complex concept that supports this ability of GAs.

- Parallelism

: GAs can leverage parallelism to speed up the search process. There are two main types:

- Implicit parallelism: This uses the population model itself to explore multiple solutions simulaneously. Imagine pairs competing in a tournament, with the winners progressing to the next round.

→ In implicit parallelism, you can only evaluate pairings of individuals in sequence, once at a time. - Computational parallelism: If you have a computer with multiple cores, you can use them to evaluate several combinations of individuals at the same time, significantly speeding up the search.

→ In computational parallelism, you can evaluate several combinations of individuals at the same time, depending on how many cores you have on your processor.

- Implicit parallelism: This uses the population model itself to explore multiple solutions simulaneously. Imagine pairs competing in a tournament, with the winners progressing to the next round.

'ArtificialIntelligence > Concept' 카테고리의 다른 글

| (w05) Ethics of game playing AI (0) | 2024.05.06 |

|---|---|

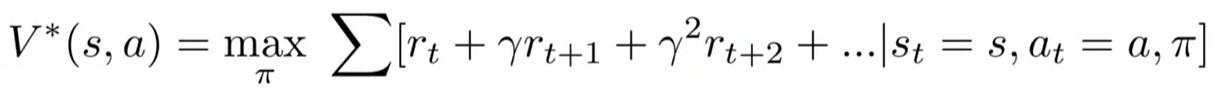

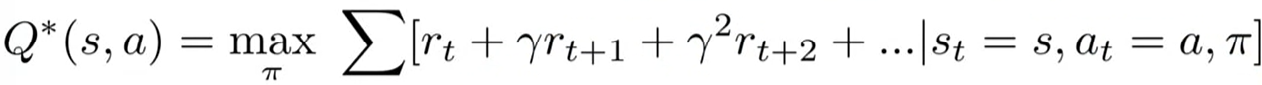

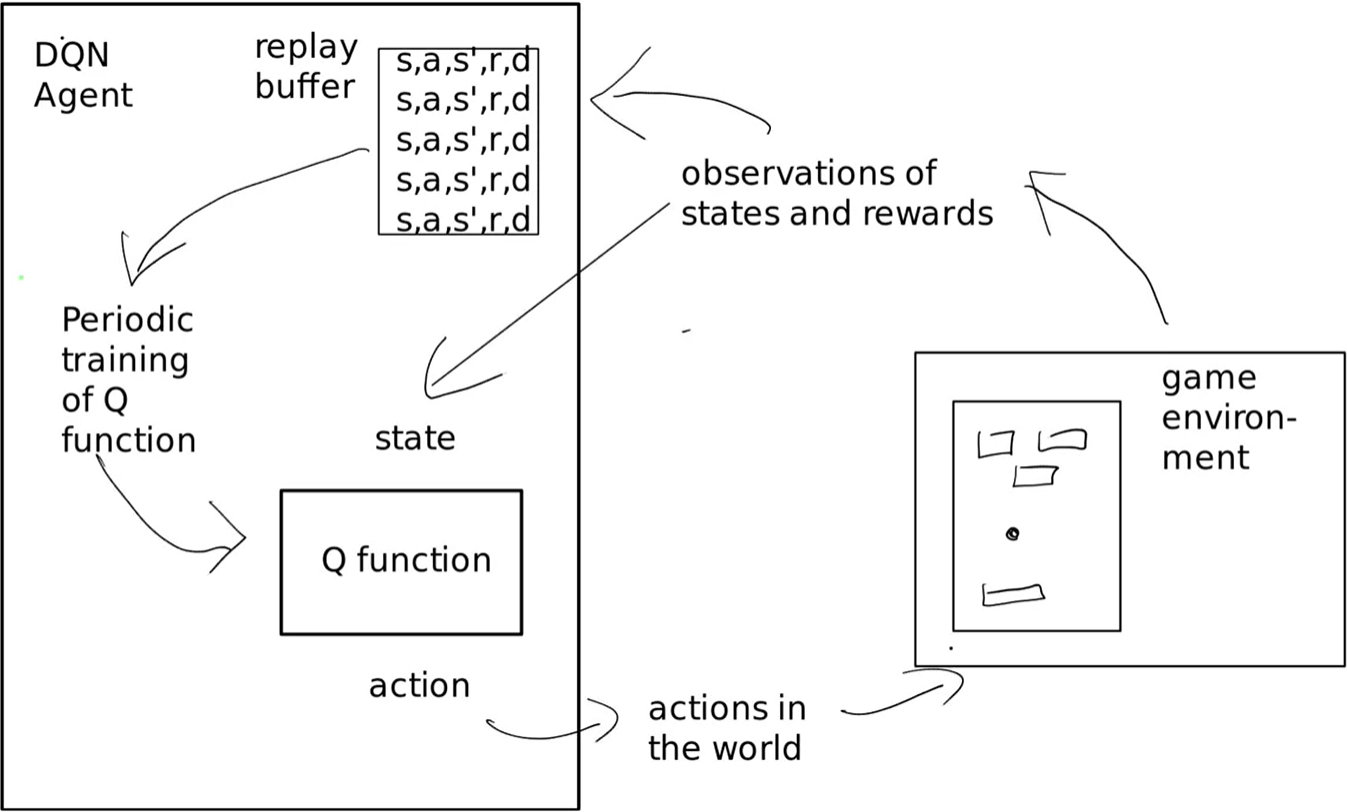

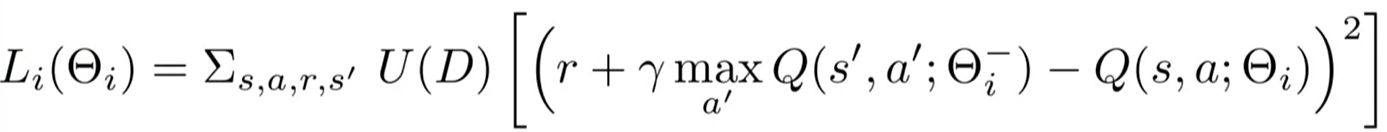

| (w02) Markov Decision Process and Deep Q Network (0) | 2024.04.16 |