Both the gradient vector and Hessian matrix are used in optimisation, particularly in continuous optimisation problems.

The gradient vector is a vector of the first partial derivatives of a scalar-valued function f(x) with respect to its n variables:

$$ \bigtriangledown f(x)= { \left [ \frac{\partial f}{\partial _{x_1}}, \frac{\partial f}{\partial _{x_2}},..., \frac{\partial f}{\partial _{x_n}} \right ] }^T $$

The gradient vector is used to find the direction of steepest ascent or descent of a scalar-valued function, depending on the problem (minimisation or maximisation). Especially in machine learning, it is essential for training models via backpropagation, where it guides parameter updates to minimise loss functions.

The Hessian matrix is an n × n matrix of the second partial derivatives of the function:

$$ H_f(x) = \nabla^2 f(x) = \frac{\partial^2 f}{\partial x_i \partial x_j} = \begin{bmatrix} \frac{\partial^2 f}{\partial x_1^2} & \frac{\partial^2 f}{\partial x_1 \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_1 \partial x_n} \\ \frac{\partial^2 f}{\partial x_2 \partial x_1} & \frac{\partial^2 f}{\partial x_2^2} & \cdots & \frac{\partial^2 f}{\partial x_2 \partial x_n} \\ \vdots & \vdots & \ddots & \vdots \\ \frac{\partial^2 f}{\partial x_n \partial x_1} & \frac{\partial^2 f}{\partial x_n \partial x_2} & \cdots & \frac{\partial^2 f}{\partial x_n^2} \end{bmatrix} $$

In optimisation, the Hessian matrix is used to determine whether a point is a local minimum, local maximum, or saddle point, and to understand the curvature of the objective function for better convergence. Methods such as Newton's method and quasi-Newton methods use the Hessian to update parameters, leveraging both first and second derivatives to find optima more efficiently than gradient-only methods.

To generalise the gradient for functions with multiple outputs, the Jacobian matrix is used. It is a matrix of all first-order partial derivatives of a vector-valued function.

A vector-valued function f(x) is defined as:

$$ f(x)=\begin{bmatrix} f_1(x_1,x_2,...,x_n) \\ f_2(x_1,x_2,...,x_n) \\ \vdots \\ f_m(x_1,x_2,...,x_n) \end{bmatrix} $$

The Jacobian matrix of f(x) is:

$$ J_f(x) = \begin{bmatrix}

\frac{\partial f_1}{\partial x_1} & \frac{\partial f_1}{\partial x_2} &

\cdots & \frac{\partial f_1}{\partial x_n} \\

\frac{\partial f_2}{\partial x_1} & \frac{\partial f_2}{\partial x_2} &

\cdots & \frac{\partial f_2}{\partial x_n} \\

\vdots & \vdots & \ddots & \vdots \\

\frac{\partial f_m}{\partial x_1} & \frac{\partial f_m}{\partial x_2} &

\cdots & \frac{\partial f_m}{\partial x_n} \\

\end{bmatrix} $$

The Jacobian plays a key role in backpropagation (especially in layers with multiple outputs) in machine learning, and is also used in Newton's method for solving systems of nonlinear equations involving vector-valued functions.

'MachineLearning > Optimisation' 카테고리의 다른 글

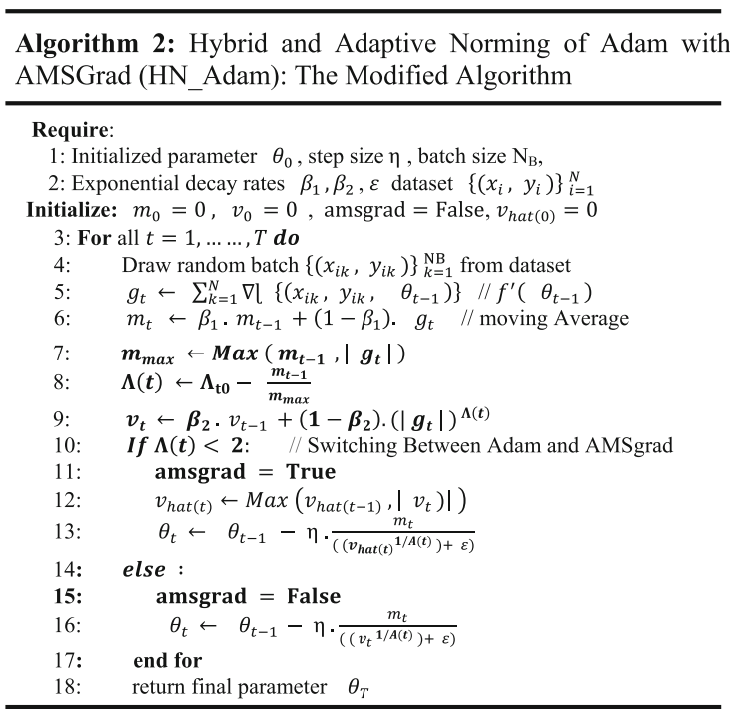

| SGD, ADAM, HN ADAM (3) | 2025.06.27 |

|---|---|

| Regularised least squares (RLS) (0) | 2024.01.22 |

| Least-squares problems (0) | 2024.01.16 |

| Mathematical optimisation problem (basic concept) (0) | 2024.01.16 |