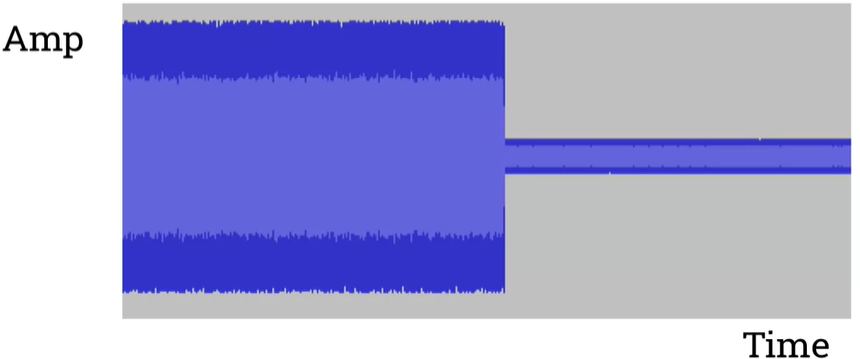

A simple example of scalar audio processing, where every sample is multiplied by the same number:

- Amplitude is on the y-axis.

- Time is on the x-axis.
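Multiplying each sample by one scalar can be sketched in a few lines. This is a minimal sketch that assumes the signal is a plain Python list of float samples. The function name `apply_gain` and the short sine-wave test signal are hypothetical and were chosen only for illustration.

```python
import math


def apply_gain(samples, gain):
    """Multiply every sample by the same scalar (gain).

    Hypothetical helper: a gain > 1 makes the signal louder, a gain
    between 0 and 1 makes it quieter, and 0 mutes it.
    """
    return [s * gain for s in samples]


# Hypothetical test signal: one cycle of a sine wave over 8 samples, peak amplitude 0.5
signal = [0.5 * math.sin(2 * math.pi * n / 8) for n in range(8)]

louder = apply_gain(signal, 2.0)   # every amplitude doubled
quieter = apply_gain(signal, 0.5)  # every amplitude halved
```

Each sample changes by the same factor, so the shape of the waveform along the time axis stays the same. Only its height on the amplitude axis changes.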

Normalisation in audio signals allows us to adjust the volume (amplitude) of the entire signal.

- Every sample is multiplied by the same factor, so the amplitude changes proportionally across the whole signal.

Normalisation in audio signals is a bit simpler than statistical normalisation. It involves two phases: analysis and scaling.

- Analysis phase

: In this phase, the signal is analysed to find the peak, i.e. the loudest sample. This is essentially a peak-finding algorithm that identifies the highest absolute amplitude in the waveform.

- Scaling phase

: Once the peak is found, the algorithm calculates how much gain can be applied to the entire signal without causing clipping (distortion). This gain is then applied uniformly to the entire signal.
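The two phases can be sketched as one short peak-normalisation function. This is a minimal sketch that assumes float samples where ±1.0 is full scale. Under that assumption, a peak of 1.0 is the loudest level possible without clipping. The name `normalise` and the `target_peak` parameter are hypothetical.

```python
def normalise(samples, target_peak=1.0):
    """Peak-normalise a list of float samples (hypothetical helper).

    Assumes full scale is +/-1.0, so target_peak=1.0 applies the most
    gain possible without clipping.
    """
    # Analysis phase: find the loudest sample, i.e. the largest absolute value.
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        # Silent (or empty) signal: no gain can raise it, return an unchanged copy.
        return list(samples)
    # Scaling phase: the gain that moves the peak exactly to target_peak...
    gain = target_peak / peak
    # ...applied uniformly to every sample.
    return [s * gain for s in samples]


quiet_signal = [0.1, -0.4, 0.25]      # hypothetical samples; peak is |-0.4|
loud_signal = normalise(quiet_signal)  # gain of about 2.5, new peak at -1.0
```

The peak is taken as an absolute value so that a large negative sample counts as loud too. Otherwise the scaling phase could push a negative peak past -1.0 and clip it.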

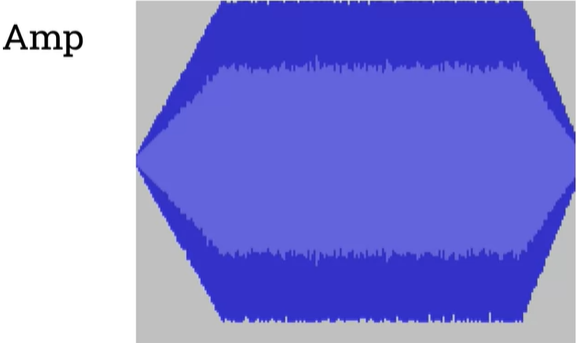

Linear ramps: fading in and out

- Fade in

: The scalar starts at zero, which mutes the signal. It then increases gradually across the range until it reaches one. A scalar of one leaves the signal unchanged, so by the end of the ramp the original signal is fully restored.

- Fade out

: The scalar starts high (typically at one) at the beginning of the array of samples being processed. As we iterate over the array, the scalar is reduced down to zero, so the signal sounds as if it is getting quieter until it falls silent.
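Both fades are a per-sample scalar that changes linearly with the sample index. This is a minimal sketch that assumes the ramp spans the whole list of samples. A real fade would often cover only the first or last part of a longer signal. The function names are hypothetical.

```python
def fade_in(samples):
    """Linear fade in over the whole list (hypothetical helper)."""
    n = len(samples)
    if n < 2:
        # A ramp needs at least two points; return an unchanged copy.
        return list(samples)
    # Scalar rises linearly from 0.0 (mute) on the first sample
    # to 1.0 (no change) on the last sample.
    return [s * (i / (n - 1)) for i, s in enumerate(samples)]


def fade_out(samples):
    """Linear fade out over the whole list (hypothetical helper)."""
    n = len(samples)
    if n < 2:
        return list(samples)
    # Scalar falls linearly from 1.0 on the first sample to 0.0 on the last.
    return [s * (1 - i / (n - 1)) for i, s in enumerate(samples)]


constant = [1.0] * 5
print(fade_in(constant))   # [0.0, 0.25, 0.5, 0.75, 1.0]
print(fade_out(constant))  # [1.0, 0.75, 0.5, 0.25, 0.0]
```

Applying the fades to a constant signal of 1.0 makes the ramp itself visible. Each output value is simply the scalar used at that position.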
