Text is often referred to as unstructured data.

- Text has plenty of structure, but it is linguistic structure as it is intended for human consumption, not for computers.

Text may contain synonyms (multiple words with the same meaning) and homographs (one spelling shared among multiple words with different meanings).

People write ungrammatically, misspell words, run words together, abbreviate unpredictably, and punctuate randomly.

Because text is intended for communication between people, context is important.

The general strategy in text mining is to use the simplest technique that works.

- A document is composed of individual tokens or terms.

- A collection of documents is called a corpus.

Language is ambiguous. To determine structure, we must resolve ambiguity.

- Processing text data:

- Lexical analysis (tokenisation)

: Tokenisation is the task of chopping it up into pieces, called tokens. - Stop word removal

: A stopword is a very common word in English. The words the, and, of and on are considered stopwords so they are typically removed. - Stemming

: Suffixes are removed so that verbs like announces, announced and announcing are all reduced to the term accounc. Stemming also transforms noun plurals to the singular forms, so directors becomes director. - Lemmatisation

: A lemma is the canonical form, dictionary form, or citation form of a set of word forms. For example, break, breaks, broke, broken and breaking are forms of the same lexeme, with break as the lemma by which they are indexed. Lemmatisation is the algorithmic process of determining the lemma of a word based on its intended meaning. - Morphology (prefixes, suffixes, etc.)

- Lexical analysis (tokenisation)

- The higher levels of ambiguity:

- Syntax (part of speech tagging)

- Ambiguity problem

- Parsing (grammar)

- Sentence boundary detection

- Syntax (part of speech tagging)

'NaturalLanguageProcessing > Concept' 카테고리의 다른 글

| (w07) Lexical semantics (0) | 2024.05.22 |

|---|---|

| (w06) N-gram Language Models (0) | 2024.05.14 |

| (w04) Regular expression (0) | 2024.04.30 |

| (w02) NLP evaluation -basic (0) | 2024.04.17 |

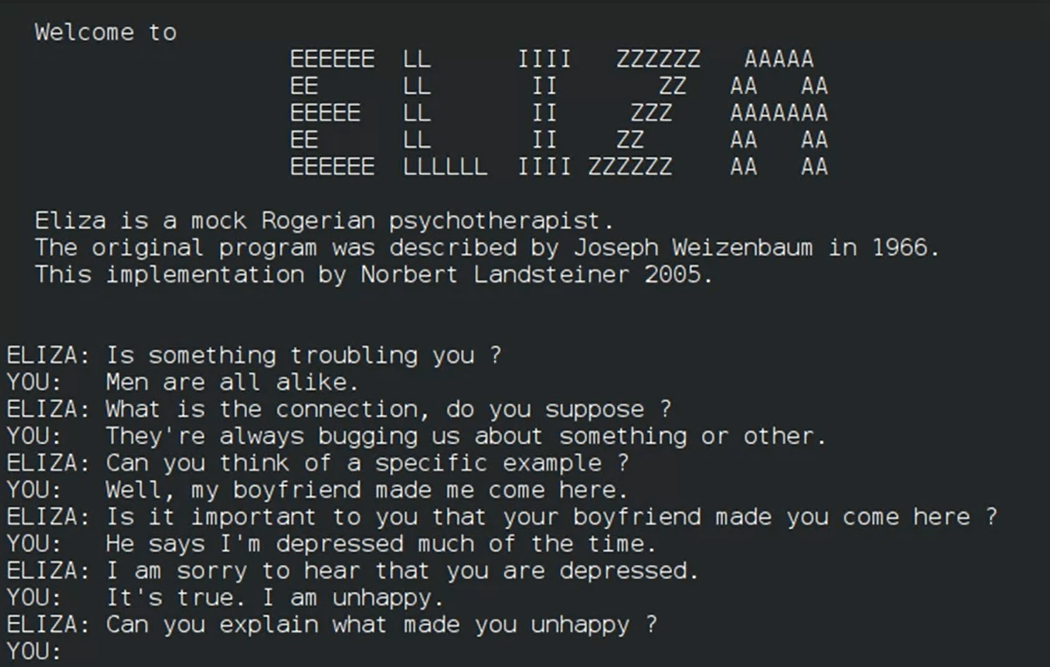

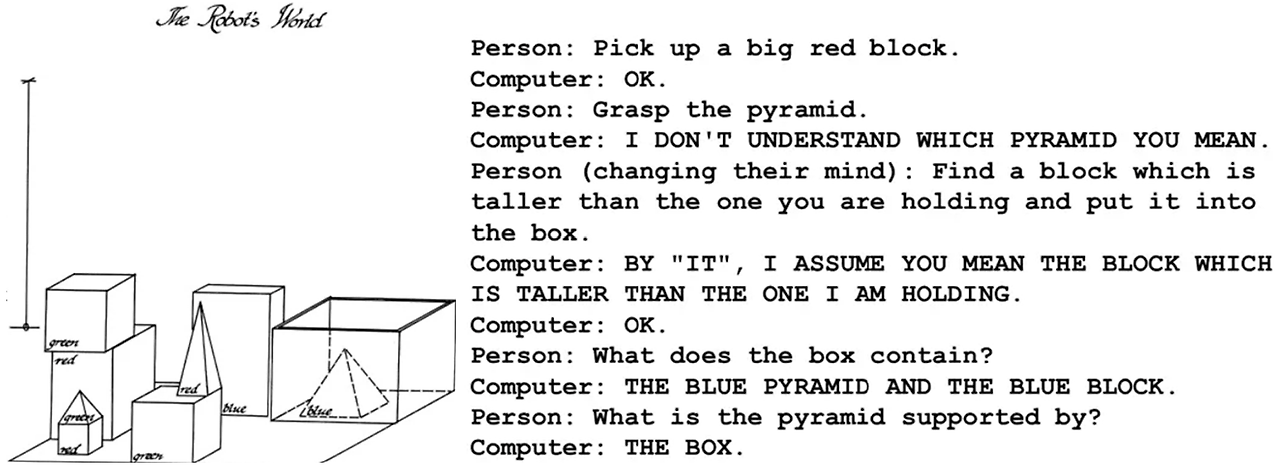

| (w01) NLP applications (0) | 2024.04.17 |