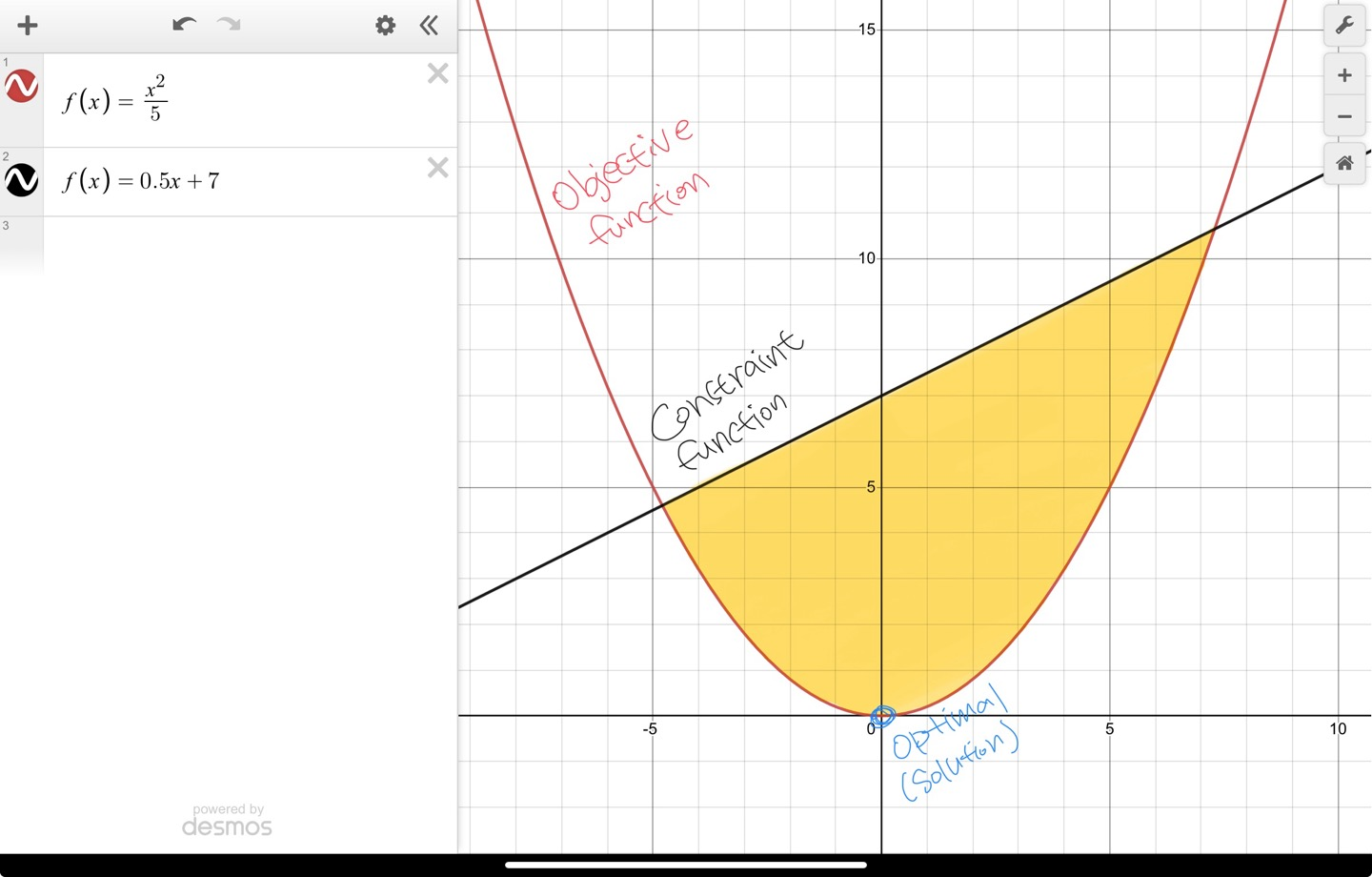

A least-squares problem is an optimisation problem with no constraints and an objective, which is as follows:

minimise

$$ f_0(x)=\left\|Ax-b \right\|_2^2=\sum_{i=1}^{k}(a_i^Tx-b_i)^2 $$

The objective function is a sum of squares of terms of the form

$$ a_i^Tx-b_i $$

The solution can be reduced to solving a set of linear equations,

$$ f(x)=\left\| Ax-b\right\|_2^2=(A_x-b)^T(Ax-b) $$

$$ =((Ax)^T-b^T)(Ax-b) $$

$$ =x^TA^TAx-b^TAx-x^TA^Tb+b^Tb $$

If x is a global minimum of the objective function, then its gradient is the zero vector.

$$ \triangledown f(x)=(\frac{\partial f}{\partial x_1},...,\frac{\partial f}{\partial x_n}) $$

The gradients are:

$$ \triangledown(x^TA^TAx)=2A^TAx, \triangledown(b^TAx)=A^Tb, \triangledown(x^TA^Tb)=A^Tb $$

Calculate these gradients with respect to

$$ x_1,...,x_n $$

Thus, the gradient of the objective function is

$$ \triangledown f(x)=2A^TAx-A^Tb-A^Tb=2A^TAx-2A^Tb $$

To find the least squares solution, we can solve

$$ \triangledown f(x)=0 $$

Or equivalently

$$ A^TAx=A^Tb $$

So we have the analytical solution:

$$ x=(A^TA)^{-1}A^Tb $$

To recognise an optimisation problem as a least-squares problem, we only need to verify that the objective is a quadratic function.

'ConvexOptimisation' 카테고리의 다른 글

| Regularised least squares (RLS) (0) | 2024.01.22 |

|---|---|

| Mathematical optimisation problem (basic concept) (0) | 2024.01.16 |